Introduction

It’s no secret that humans are not always rational. Although humans innately possess the capacity for complex and abstract reasoning, strongly held beliefs and cognitive biases often lead people to use that ability selectively and/or to employ it in defense of unfounded ideas via specious motivated reasoning.[1][2][3] One way in which this manifests is through the selective rejection of well-supported scientific findings.[4][5] This may take the form of rejecting evolution, denying the anthropogenic component of climate change, or believing that vaccines and genetically engineered foods are dangerous (just to name a handful).

Accordingly, people who reject one or more robustly supported scientific findings on ideological grounds have formulated or co-opted several arguments to defend their beliefs. Although these arguments are numerous and vary widely in sophistication, they are finite in number and invariably flawed, and many of them are repeated often enough that I think they’re worth learning to recognize and refute.

Science Has Been Wrong Before! #CheckmarkScientists!

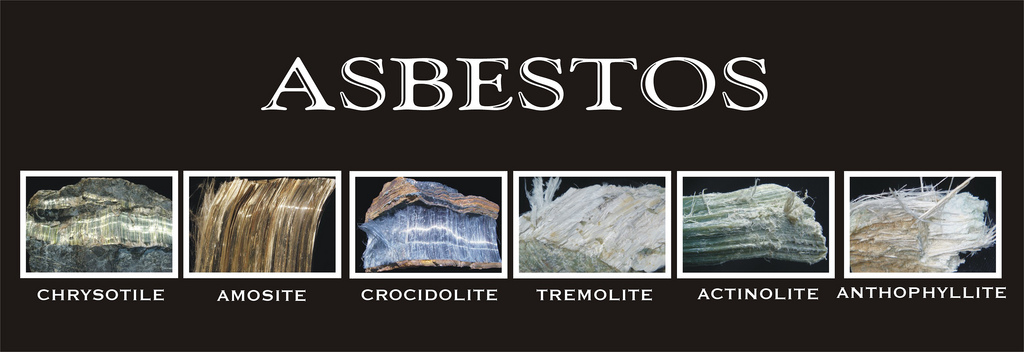

A particularly common family of these arguments takes the form “scientists were wrong about x, therefore they’re probably wrong about y.” Specific examples include “science* said smoking was good for you,” “science said thalidomide was safe,” “science said the earth was flat,” “in the 1970s there was a scientific consensus that an ice age was imminent,” “asbestos used to be considered perfectly safe,” and “what about DDT?”

*Obviously, science isn’t a person: it’s a set of approaches to the generation of new knowledge, but that is often how I’ve seen such arguments phrased. In my opinion, focusing too much on rebutting the grammatical issue distracts from the deeper flaws in the argument, and is ultimately an inferior argumentative strategy.

Don’t worry if you aren’t familiar with all of these; they’re just examples. The point is that they share a common underlying theme, and they’re all flawed. In nearly all cases, these arguments are used to justify rejecting some more current scientific conclusion that the claimant simply doesn’t like. The smoking, thalidomide, asbestos, and DDT examples are invoked when the claimant doesn’t accept the science behind something researchers have determined to be generally safe under normal circumstances (for humans, for the environment, or both). The 1970s global cooling example is used to cast doubt on the current scientific consensus on anthropogenic climate change, and the flat earth example is used for a variety of purposes.

Addressing “Scientists Were Wrong” Arguments

There are multiple problematic aspects to these “scientists were wrong” arguments. From a tactical point of view, each time I respond to one, I must choose which of its flaws (or which combination of them) to emphasize.

On the one hand, I could tear apart the person’s examples. It turns out that most of the cases people list aren’t very good examples at all: tobacco, thalidomide, the earth being flat, geocentrism, global cooling, asbestos, Agent Orange, and possibly DDT are all poor examples that typically rely on some degree of historical revisionism to work.[6][7][8][9][10] In some of them, the idea was never the subject of strong scientific consensus, and scientists weren’t actually wrong. Others have more nuanced and circumstantial stories that make them weak examples for the contrarian’s intended purpose. Although each example may make for an interesting case study, and I’ve used this approach on occasion, trying to address every such example can turn into something of a fool’s errand. This is because contrarians can always dig up more examples while ignoring the flaws that were pointed out in their previous ones. It can also be quite time-consuming to break each case down meticulously, since different examples fail to support the claimant’s thesis for different reasons. Perhaps even more importantly, this approach is tangential to the larger issue: past mistakes by scientists neither discredit the scientific method nor establish that it is epistemically reliable to reject well-supported scientific conclusions arbitrarily because of personal feelings. Nevertheless, deconstructing the specific examples is one possible approach.

Alternatively, I can explain the relativity of wrong (as Asimov put it).[11] Old observed facts never really go away, and that places constraints on which aspects of a scientific theory can change in light of new evidence, and on how they can change without contradicting the facts or predictions that the older theory or model got right. Wrongness comes in varying degrees, and scientific knowledge progresses towards increasing refinement and/or, in some cases, a conceptual reframing that preserves certain predictions of the older theory while reinterpreting their meaning. This phenomenon can be understood by analogy with the correspondence principle, as I’ve written about here.[12] Science is a self-correcting enterprise, and that’s among its strengths, not its weaknesses. The main idea of this approach, however, is to emphasize that even when such self-corrections occur, they can’t happen just any old way. Someone relying on the future vindication of their (currently unsupported) beliefs, on the grounds that the current paradigm could be wrong, is ignoring the fact that scientific theories must remain consistent with the evidence. A new theory may account for that evidence differently than its predecessors did from a conceptual standpoint, but it can’t simply contradict it.

Another option is to explain why Bayesian reasoning promises a higher rate of accuracy in our views than so-called pessimistic meta-induction (the view that since scientists were wrong before, they are likely equally wrong now). If you’ve never heard of Bayesian reasoning or pessimistic meta-induction, don’t worry about it. You’re not alone, and it’s not a central focus of this article. Put more plainly, the idea is that provisionally accepting the weight of the evidence in the present and later updating our views (if and only if newer evidence warrants doing so) leaves us wrong less often and to a lesser degree than rejecting the preponderance of evidence under the unjustified assumption that the particular conclusions being rejected are the ones that will later be proved wrong. Notice that none of these people start walking off the tops of buildings on the grounds that “gravity might be wrong.” This approach is tricky to understand and even trickier to explain concisely. The concepts are somewhat sophisticated, and I’ve chosen to postpone further elaboration for a future article. The point is simply that this option exists as a possible counterpoint, that it’s related to concepts from epistemology and Bayesian statistics, and that it aims to minimize both the frequency and the degree to which we’re mistaken on scientific questions.

That said, there’s an even more fundamental way in which the “scientists were wrong” argument falls apart: I can explain how this argument undermines its own premises. This approach targets an implicit logical inconsistency in the argument and gets right to the heart of why science is our best bet for the generation of reliable knowledge, and why the “science was wrong” argument itself unwittingly relies on that fact.

It is this option to which I’d like to direct our focus for the duration of this article.

The Science Denier’s Paradox

It goes something like this:

If someone rejects science, then they unwittingly undermine any basis upon which they could argue that “scientific consensus has been wrong before” as a justification for rejecting scientific conclusions they don’t like.

Why?

Because the scientific process itself was the means by which older scientific views were amended. So, if someone rejects that, then who are they to say that the more recent research which corrected the old view was any better or more reliable?

According to their own thesis, that research can’t be any more reliable than the older scientific views it helped correct or refine, because conceding to the provisional conclusions of the scientific evidence supposedly confers no special epistemic advantages over any other alleged path to knowledge.

This is the Science Denier’s Paradox.

Putting it another way, the “scientists were wrong” argument relies on the premise that older scientific theories were wrong, but the only reason we know they were wrong is through iterative applications of the scientific process. To claim to know that those older theories were wrong is to implicitly admit that the process by which they were determined to be wrong generates knowledge of greater reliability than that which preceded it. If it didn’t, the contrarian would be in no position to claim that any of those old theories were wrong.

The contrarian could of course still argue that changes in collective scientific knowledge were arbitrary and/or unreliable for some other convoluted reason, but to do so would logically require abandoning the premise that instances of self-correction in the history of science mean that the prevailing scientific views which preceded them were necessarily wrong. To acknowledge that such self-correction indicates that an earlier view was wrong is to acknowledge that the average net direction in which scientific knowledge changes is towards increasing accuracy.

In turn, to acknowledge that is to admit that any alternative method one might substitute for the scientific conclusions one has rejected is virtually certain to be less reliable than the scientific approach. As such, the only way to properly discredit a prevailing scientific consensus is to undertake research that uncovers new evidence which cannot be reconciled with the prevailing theory, and/or to introduce a superior interpretation that accounts for both the predictions the older view got right and the evidence that falsified it. Merely sitting back and proclaiming that scientists can be wrong isn’t going to cut it. You must show that they are, in fact, wrong in this particular instance, and making lame excuses for ignoring their findings isn’t going to accomplish that. At best, it might dupe some non-scientists and muddy public understanding of the issue. Granted, in many cases that’s all it was ever supposed to accomplish, but part of addressing public misconceptions is achieving clarity about the distortions that contribute to them.

Closing Thoughts

In some instances, these bad apologist arguments may be a result of a mere knowledge deficit, but psychological research has suggested that this explains only a tiny minority of cases.[13] Although a few communication techniques have been developed, researchers in the social sciences have been more successful in identifying what typically does not work for changing people’s beliefs than in formulating pragmatic protocols which do work reliably. For this reason, I won’t presume to prescribe procedures for you to reason your friends and family out of positions they didn’t reason themselves into to begin with. Logic and evidence are persuasive only to data-driven people, and such a disposition often requires a concerted effort to cultivate and habituate.

What I can do, however, is deconstruct some of the more common anti-science arguments and help achieve clarity on precisely how and why they fail from a logical and empirical perspective. That’s all I’ve really aimed to accomplish here. Just be forewarned that what is most reasonable and what is most persuasive do not always coincide, and debates over which is more important date back at least as far as Plato’s dialogues. I won’t attempt to address that debate either, except to state that I think both goals are important with respect to science communication. Getting through to the firmly entrenched by whatever means necessary is important, but so is encouraging those who already value a scientific approach to hone their analytical skills. I hope I’ve been able to achieve that here insofar as this family of anti-science apologist arguments is concerned.

One challenge I often run into with these kinds of articles is that many apologist arguments exhibit a feature I call fractal wrongness. That is to say, they are flawed on so many levels that a comprehensive refutation of all the different respects in which they are wrong becomes impractical for a single article (both for the writer and the reader). As a result, I often envision their rebuttals as an interconnected network of proverbial embedded drop-down menus: individual articles, each addressing a manageably sized component of the fractally wrong structure, nested within articles of intermediate scale. The intermediate-scale and higher-level articles would then tie them together into a bird’s-eye view of the bigger picture. The difficulty is that it’s hard to capture that embedded drop-down-menu quality in a single self-contained article without contextualizing it with some reference to the higher-order structure of the network of interrelated articles and their subtopics. It’s a work in progress.

References

[1] Strickland, A. A., Taber, C. S., & Lodge, M. (2011). Motivated reasoning and public opinion. Journal of Health Politics, Policy and Law, 36(6), 935-944.

[2] Westen, D., Blagov, P. S., Harenski, K., Kilts, C., & Hamann, S. (2006). Neural bases of motivated reasoning: An fMRI study of emotional constraints on partisan political judgment in the 2004 US presidential election. Journal of Cognitive Neuroscience, 18(11), 1947-1958.

[3] Hahn, U., & Harris, A. J. (2014). What does it mean to be biased: Motivated reasoning and rationality. In Psychology of Learning and Motivation (Vol. 61, pp. 41-102). Academic Press.

[4] Washburn, A. N., & Skitka, L. J. (2018). Science denial across the political divide: Liberals and conservatives are similarly motivated to deny attitude-inconsistent science. Social Psychological and Personality Science, 9(8), 972-980.

[5] Kraft, P. W., Lodge, M., & Taber, C. S. (2015). Why people “don’t trust the evidence”: Motivated reasoning and scientific beliefs. The ANNALS of the American Academy of Political and Social Science, 658(1), 121-133.

[6] Blowing smoke: Annihilating fallacious comparisons of biotech scientists to tobacco company lobbyists. (2015). The Credible Hulk. Retrieved 20 January 2020, from https://www.crediblehulk.org/index.php/2015/05/14/blowing-smoke-annihilating-the-fallacious-comparison-of-modern-biotech-scientists-to-tobacco-company-lobbyists/

[7] Oh yeah? Thalidomide! Where’s your science now? (2020). Sciencebasedmedicine.org. Retrieved 20 January 2020, from https://sciencebasedmedicine.org/oh-yeah-thalidomide-wheres-your-science-now/

[8] How the Ancient Greeks Knew the Earth Was Round. (2020). Curiosity.com. Retrieved 20 January 2020, from https://curiosity.com/topics/how-the-ancient-greeks-knew-the-earth-was-round-curiosity/

[9] Peterson, T. C., Connolley, W. M., & Fleck, J. (2008). The myth of the 1970s global cooling scientific consensus. Bulletin of the American Meteorological Society, 89(9), 1325-1338.

[10] Why The Asbestos Gambit Fails. (2017). The Credible Hulk. Retrieved 20 January 2020, from https://www.crediblehulk.org/index.php/2017/05/05/why-the-asbestos-gambit-fails/

[11] Asimov, I. (1989). The relativity of wrong. The Skeptical Inquirer, 14(1), 35-44.

[12] Incommensurability, The Correspondence Principle, and the “Scientists Were Wrong Before” Gambit. (2018). The Credible Hulk. Retrieved 20 January 2020, from https://www.crediblehulk.org/index.php/2018/01/05/incommensurability-the-correspondence-principle-and-the-scientists-were-wrong-before-gambit/

[13] Simis, M. J., Madden, H., Cacciatore, M. A., & Yeo, S. K. (2016). The lure of rationality: Why does the deficit model persist in science communication? Public Understanding of Science, 25(4), 400-414.
